Neuroscience is constantly evolving as new methods to collect, analyze and model neural measurements are being developed. One such development has been the use of deep neural networks (DNNs) as models of biological neural networks, in particular the ventral stream of the primate visual system. This approach has gained popularity during a data-driven era of neuroscience in which emphasis has been placed on collecting and integrating more (more cells, more regions, more trials) and better (higher resolution, higher signal-to-noise ratio) data than ever before. However, it has also become clear that data alone can’t push neuroscience forward. The data is important, but what is the data for?

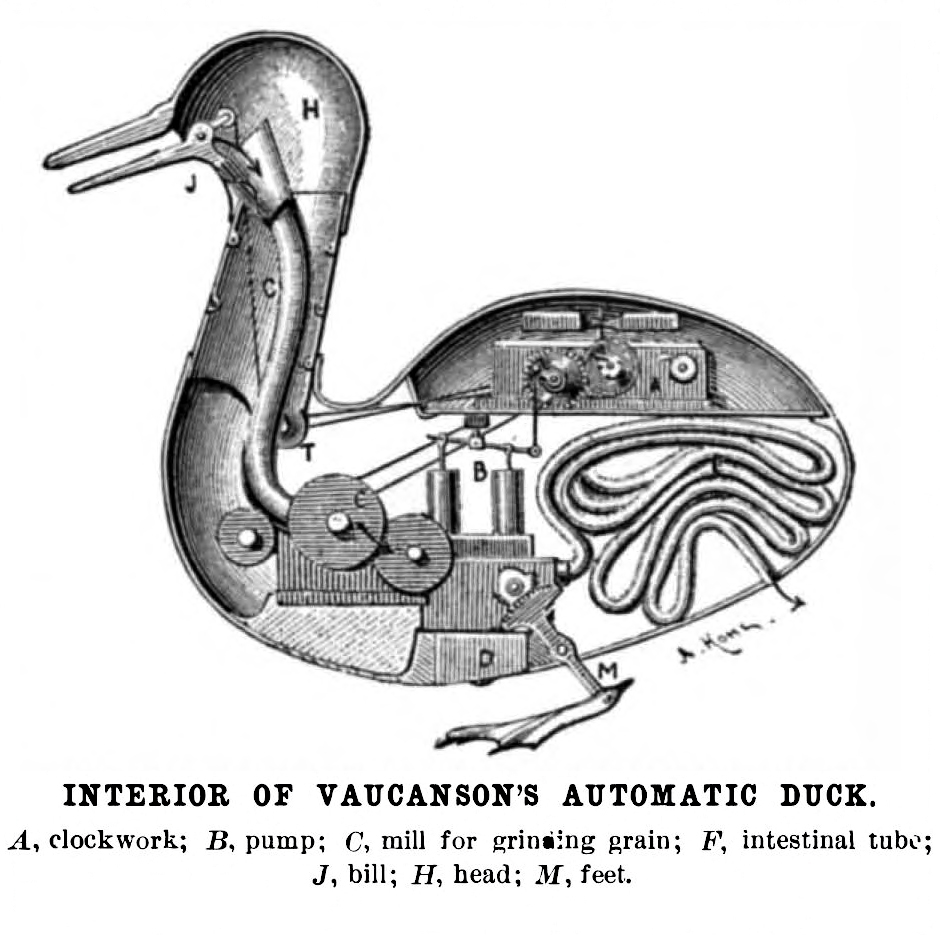

One approach has been to build models that are able to predict neural activity while an animal is engaged in some task or presented with some stimulus. Traditionally, a model would be designed given what is already known about the system of interest and the researchers’ hypotheses about neural function. Modern DNNs, on the other hand, though originally inspired by biological neural networks, were designed to solve computer vision problems independent of any knowledge or specific hypotheses about neural function. That such networks, trained to recognize objects in images, learned representations similar to those found in the primate ventral stream caused much debate in the neuroscience community. Jim DiCarlo, one of the leading researchers in this area, has described his approach as turning the scientific problem of neuroscience into an engineering one where the primary goal is to optimize the accuracy of predictive models (DiCarlo, 2018). Competitions such as the Algonauts project (Cichy et al., 2019) and BrainScore (Schrimpf et al., 2018) seek to identify the models that achieve the best score on standardized tasks, akin to engineering competitions such as Kaggle. The approach of ‘Predict, then Simplify’ prescribes first building a predictive model and then trying to explain why it is successful (Kubilius, 2017). Critics of this approach have essentially claimed that these DNN similarity results don’t count as scientific progress, at least not in the same way that the results of traditional modeling studies do, because the models themselves are “uninterpretable” or biologically implausible (Kay, 2017). The approach has also been criticised as replacing one black box with another, i.e., modeling one thing we don’t understand with another that we also don’t understand (Middlebrooks, 2019). This criticism implies that no scientific progress has been made.

How can the predictive performance of representations learned in DNNs tell us anything about the brain when they don’t encode specific hypotheses about neural function?

Many of the conversations alluded to above start by asking what it would mean to understand the brain: e.g., the paper “What does it mean to understand a neural network?” by Lillicrap and Kording (2019), or the Challenges and Controversies session “What it would mean to succeed at understanding how cognition is implemented in the brain” at the 2018 Conference on Computational Cognitive Neuroscience. Are these the right questions to be asking if we’re concerned with how our science progresses? In philosophy, understanding is viewed as a type of personal, cognitive achievement (Grimm and Hannon, 2014). When an individual comes to understand a language, another person, a proof or a scientific theory, it is a personal achievement. What role does understanding play in scientific progress? As scientists, are we not more interested in the products of scientific enterprises than in the cognitive achievements of individuals?

To begin, we assume that science eventually makes progress towards its goals. This doesn’t imply that science proceeds directly to its goals or that all its goals are achievable, but it does imply that science moves towards something and that the activity of doing science is more than just going in circles. Some applied sciences will have very clearly defined goals, such as improving patient outcomes for medical research. In the absence of clear applied research goals, many contemporary scientists and philosophers would agree that one of the primary goals of fundamental science, of science for science’s sake, is to explain, i.e., to provide explanations of the phenomena that are the focus of scientific study.

So then, if we want to understand how fundamental science progresses, we need to know what constitutes a scientific explanation. This has been a central question for philosophers of science over the past century. The goal of this line of inquiry is to identify the norms of scientific explanation. In the analytic philosophy tradition, this amounts to identifying the necessary and sufficient conditions under which something may be deemed a successful scientific explanation.

Around the mid-twentieth century, philosophers who tackled this question sought to identify a universal and objective logic of scientific explanation using what has been called an a priori approach, which reflects the belief that philosophy of science can pass judgement on and discern the rules of science from a non-scientific viewpoint. Philosophers looked to physics as the model science and tried to develop general theories of explanation that would account for all scientific explanations. As will be discussed in more depth later, proposals included that explanations are deductive arguments based on laws of nature (deductive-nomological model) and that explanations are collections of statistical relevance relationships (statistical relevance model). These works viewed science as providing an objective window into truth and assumed that it must have a clear and universal set of rules. This enterprise is largely considered to have failed to achieve its goal, as each theory faces a number of counterexamples for which it is unable to account.

In contrast, the field of science studies tackles the subjective aspects of science. Science is performed by bias-laden humans in a particular social and historical context, which will affect what questions get asked, how science is performed, and how its results are presented and received. An extreme view on explanation within this tradition is that scientific explanation is simply whatever scientists find to be explanatory at a given time and place, and that there is no objective notion of explanation independent of its social and historical context. This view is unsettling to many scientists and philosophers who want to believe that there is something special about science compared to other ways of knowing. How can we theorize about science in general if everything is relative? Wesley Salmon writes,

First, we must surely require that there be some sort of objective relationship between the explanatory facts and the fact-to-be-explained. . . Second, not only is there the danger that people will feel satisfied with scientifically defective explanations; there is also the risk that they will be unsatisfied with legitimate scientific explanations . . . The psychological interpretation of scientific explanation is patently inadequate (Salmon, 1984, pg. 13).

Similarly, Carl Craver writes, “All scientists are motivated in part by the pleasure of understanding. Unfortunately, the pleasure of understanding is often indistinguishable from the pleasure of misunderstanding. The sense of understanding is at best an unreliable indicator of the quality and depth of an explanation” (Craver, 2007, pg. 21).

The philosopher of science assumes that explanations of natural phenomena exist, regardless of whether scientists are ever satisfied with them. In the latter part of the twentieth century, after several failed attempts at a universal theory of scientific explanation, a naturalized philosophy of science emerged, where “naturalized” refers to subsumption under the natural sciences. This naturalization is attributed in part to W.V.O. Quine, who proposed that the project of epistemology is actually the “scientific inquiry into the processes by which human beings acquire knowledge” (Bechtel, 2008). Naturalized philosophy rejects the a priori approach and instead employs an interdisciplinary approach including methods from history, anthropology and psychology. This rejection of the a priori approach does not imply abandoning the normative goals of philosophy of science. This new philosophy of science has both descriptive and normative goals, where a descriptive goal (how science is) may be considered the first step towards a normative goal (how science ought to be) (Craver, 2007). Historical explanations can be evaluated on pragmatic terms: we can look at what an explanation facilitated. What new technology, medicine, control or future research did it enable? A naturalized philosophy of explanation still assumes that there are good and bad explanations but uses different methods to elucidate the difference.

The naturalized approach acknowledges the context-specific elements of scientific progress. One consequence of adopting the naturalist perspective is the recognition that not all sciences are the same, and in particular that not all sciences look like physics. Many of the traditional philosophical ideas about science, whether developed by philosophers pursuing the a priori approach or the naturalist approach, were most applicable to domains of classical physics. But, starting in the 1970s and 1980s, certain philosophers who turned their attention to the biological sciences found that these frameworks did not apply all that well to different biological domains. Recognizing this, the naturalist is committed to developing accounts that work for specific sciences, postponing the question of what is common to the inquiries of all sciences. In this sense the naturalist is led to be a pluralist (Bechtel, 2008). A pluralist here is someone who accepts that there may be many forms of explanation that are equally valid in their own domains.

Neuroscience, being highly interdisciplinary, is not clearly separated from its sister sciences: biology, physics, psychology and artificial intelligence (AI). Thus, the search for a universal theory of explanation in neuroscience may be as ill-fated as the original quest for a theory of explanation for all science. We propose, instead, that explanations are phenomenon-specific. Classes of phenomena, regardless of which domain of science they belong to, will share a particular form of explanation.

This view can be especially unifying at the intersection of neuroscience and AI, where artificial systems are designed to mimic human and animal behaviour. Thus, to the extent that an artificial system and a biological system display the same phenomenon (e.g., recognizing faces), the form of the explanation of that phenomenon will be the same. To be clear, the previous statement does not imply that the explanations themselves will be the same, but that what constitutes an explanation in both cases will be the same (to the extent that they demonstrate the same phenomenon).

Within this framework, it becomes very important to clearly identify and delineate the phenomena to be explained. How a scientist conceptualizes the phenomenon to be explained may bias them towards one form of explanation or another. This is especially apparent in cognitive neuroscience, which deals with the difficult task of “understanding how the functions of the physical brain can yield the thoughts and ideas of an intangible mind” (Gazzaniga et al., 2014). In the philosophy of mind, there are many different perspectives on the nature of mind and cognition. Is cognition computation? Are cognitive agents embodied dynamical systems? We cannot completely separate the ontological question (What is cognition?) from the epistemological question (How do we explain cognition?). Thus, a commitment to a particular theory of explanation in neuroscience may also suggest a related commitment to a theory of cognition.

What are the philosophical questions most relevant to contemporary debates at the intersection of AI and neuroscience? If we’re interested in how our science progresses, then we’re interested in explanation rather than understanding. We may colloquially use ‘understand’ to stand in for ‘explain’ or to refer generally to the acquisition of knowledge, but the difference between the terms is important in formal arguments. Thus, when Lillicrap and Kording (2019) use ‘understanding’ consistent with its accepted definition in philosophy to refer to a personal achievement state, their arguments do not imply anything about scientific explanation. They emphasize compactness and compressibility in their proposal for what it means to understand a neural network because humans are only able to argue about compact systems. According to their view, any meaningful understanding of a neural network must be compressible into an amount of information that a human can consume, e.g., a textbook. They and many others often phrase the question as being about how to understand or explain ‘the brain’ or ‘a neural network’, but science isn’t in the business of explaining objects. Science may produce descriptions or characterizations of objects, but it explains phenomena. Since the form of a satisfactory explanation may depend on the phenomenon to be explained, and since the brain participates in a plethora of phenomena at many different scales, we will likely never arrive at a unitary answer for what it means to explain ‘the brain’. If we are concerned with how a new science of intelligence might progress, let us not ask how to understand the brain. Let us instead ask: what are the specific phenomena of interest at the intersection of artificial and biological intelligence, and how ought such phenomena to be explained?
The inability of the human cognitive system to simultaneously conceptualize 100 trillion synapses may indeed inform our decisions as scientists, but it need not constrain our theories of the explanation of neural phenomena.

Great caution must be exercised when we say that scientific explanations have value in that they enable us to understand our world, for understanding is an extremely vague concept. Moreover—because of the strong connotations of human empathy the word “understanding” carries—this line can easily lead to anthropomorphism. (Salmon, 1989)

We assume that an explanation (or multiple explanations) exists, regardless of whether a human scientist can ever grasp it.