I recently presented a poster entitled ‘The effect of task and training on intermediate representations in convolutional neural networks revealed with modified RV similarity analysis’ at the Conference on Cognitive Computational Neuroscience (CCN) in Berlin, Germany.

The modified RV coefficient (RV2) is a particular case of centered kernel alignment (CKA), which was recently discussed at length in an ICML workshop paper by Simon Kornblith, who also gave a presentation at ICLR 2019.

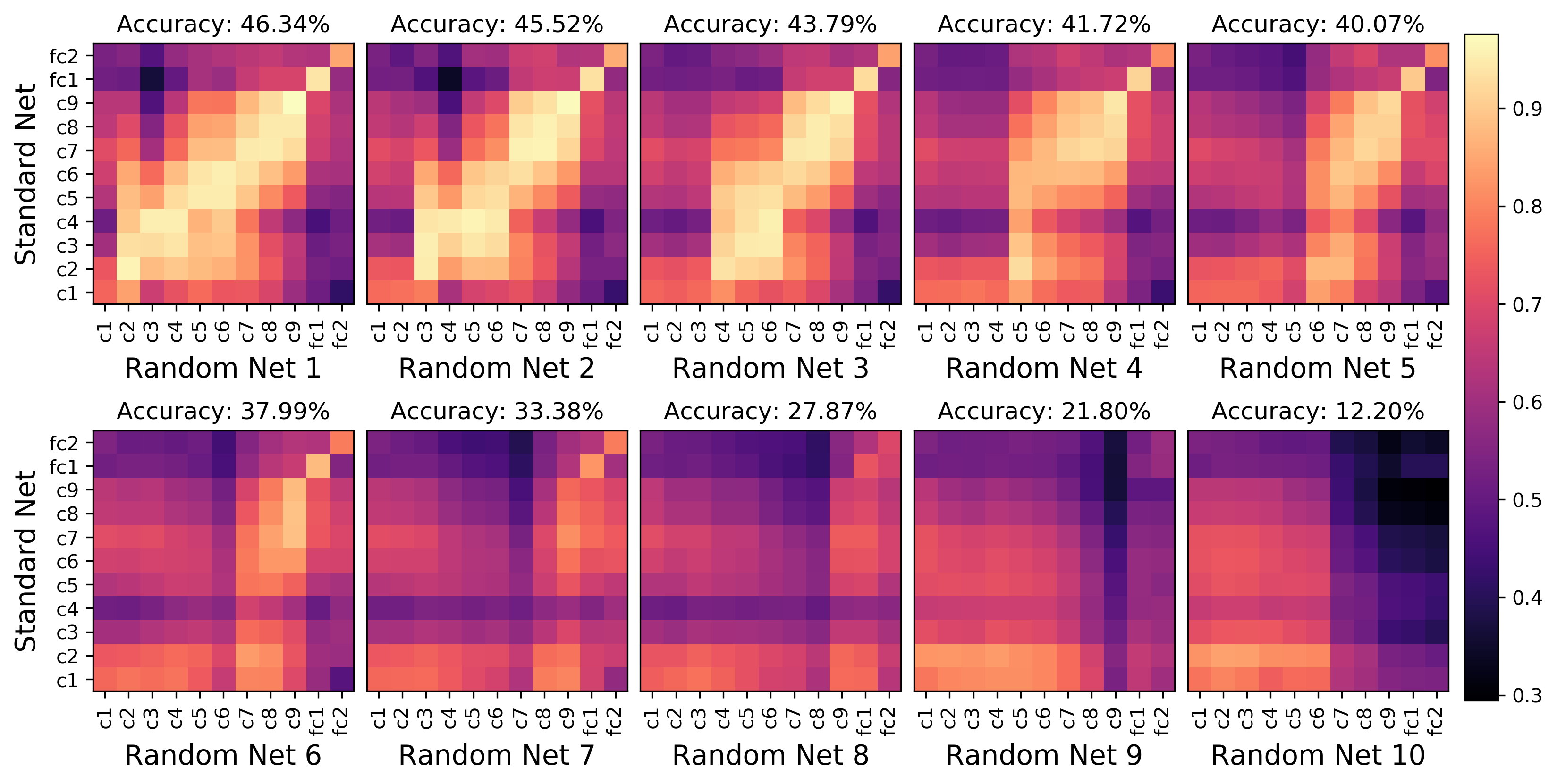

We used RV2 to compare activation patterns in networks of identical architecture trained in different ways on a transfer learning task. In particular, we investigated why freeze training achieves better transfer performance than otherwise similar transfer networks in which only the final layers are trained on the target task.
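For readers who want to try this kind of comparison, here is a minimal NumPy sketch of RV2 as it is usually defined: the sample-by-sample Gram matrices of the two activation sets are computed from column-centered data, their diagonals are set to zero, and the two matrices are then correlated. This is an illustration under stated assumptions, not necessarily the exact code behind the poster. The function name `rv2`, the array names, and the layer sizes are all hypothetical, and activations from convolutional layers are assumed to have been flattened to shape `(n_stimuli, n_features)`.

```python
import numpy as np


def rv2(X, Y):
    """Modified RV coefficient between two activation matrices.

    X and Y must have the same number of rows (one row per stimulus);
    the number of columns (units/features) may differ.
    """
    # Center each feature (column) across stimuli.
    X = X - X.mean(axis=0, keepdims=True)
    Y = Y - Y.mean(axis=0, keepdims=True)

    # Stimulus-by-stimulus Gram matrices.
    Gx = X @ X.T
    Gy = Y @ Y.T

    # The "modified" part: discard the diagonals before comparing.
    np.fill_diagonal(Gx, 0.0)
    np.fill_diagonal(Gy, 0.0)

    # Normalized inner product of the two off-diagonal Gram matrices.
    return float(np.sum(Gx * Gy) / np.sqrt(np.sum(Gx * Gx) * np.sum(Gy * Gy)))


# Hypothetical example: random stand-ins for the activations of the same
# layer in two differently trained networks, for 50 stimuli.
rng = np.random.default_rng(0)
acts_a = rng.normal(size=(50, 128))
acts_b = rng.normal(size=(50, 256))

print(rv2(acts_a, acts_a))  # identical representations give a value close to 1.0
print(rv2(acts_a, acts_b))  # unrelated random representations give a value near 0
```

Because only the Gram matrices enter the computation, the two layers can have different numbers of units, and the result is unchanged by rotating or uniformly rescaling either representation.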

My CCN paper is now on arXiv.