Science for Social Good

12 Aug 2025

Recorded Talks

02 Jul 2021

I recently gave a couple of educational tutorials which were recorded:

Epistemic diversity in a unified neuro-AI

02 Feb 2021

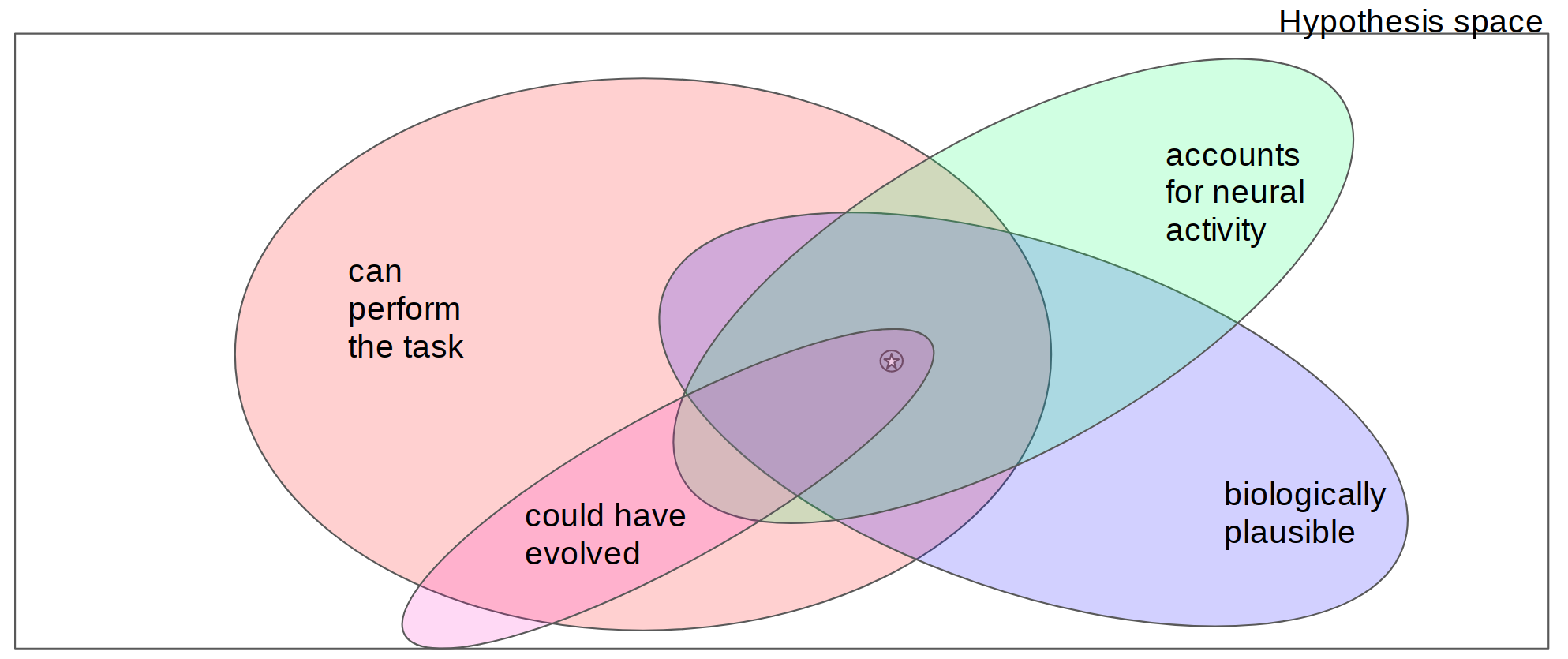

Figure 1. Models lie at the intersection of one or more constraints. The rectangle indicates the space of all possible models, where each point in the space represents a different model of some phenomenon. Regions within the coloured ovals correspond to models that satisfy specific model constraints (where satisfaction could be defined as passing some threshold of a continuous value). If a constraint is well-justified, this implies that the true model is contained within the set of models that satisfy that constraint. Models that meet more constraints, then, are more likely to live within a smaller region of the hypothesis space and hence will be closer to the truth, indicated by the star in this diagram.


asking the right questions

31 Mar 2020

Neuroscience is constantly evolving as new methods to collect, analyze and model neural measurements are developed. One such development has been the use of deep neural networks (DNNs) as models of biological neural networks, in particular the ventral stream of the primate visual system. This approach has gained popularity during a data-driven era of neuroscience in which emphasis has been placed on collecting and integrating more (more cells, more regions, more trials) and better (higher resolution, higher signal-to-noise ratio) data than ever before. However, it has also become clear that data alone can’t push neuroscience forward. The data is important, but what is the data for?

99% python fMRI processing setup

02 Aug 2019

EDIT: my nistats pull request related to this blog post was merged!

Commentary on Brette

14 Mar 2019

I recently responded to the open call for commentary on Romain Brette’s article “Is coding a relevant metaphor for the brain?”, to be published in Behavioral and Brain Sciences. Philosopher Corey Maley and I submitted the following proposal. However, we were not invited to submit our commentary. Here is the brief synopsis of what we had planned to comment on:

NeurIPS 2018

01 Dec 2018

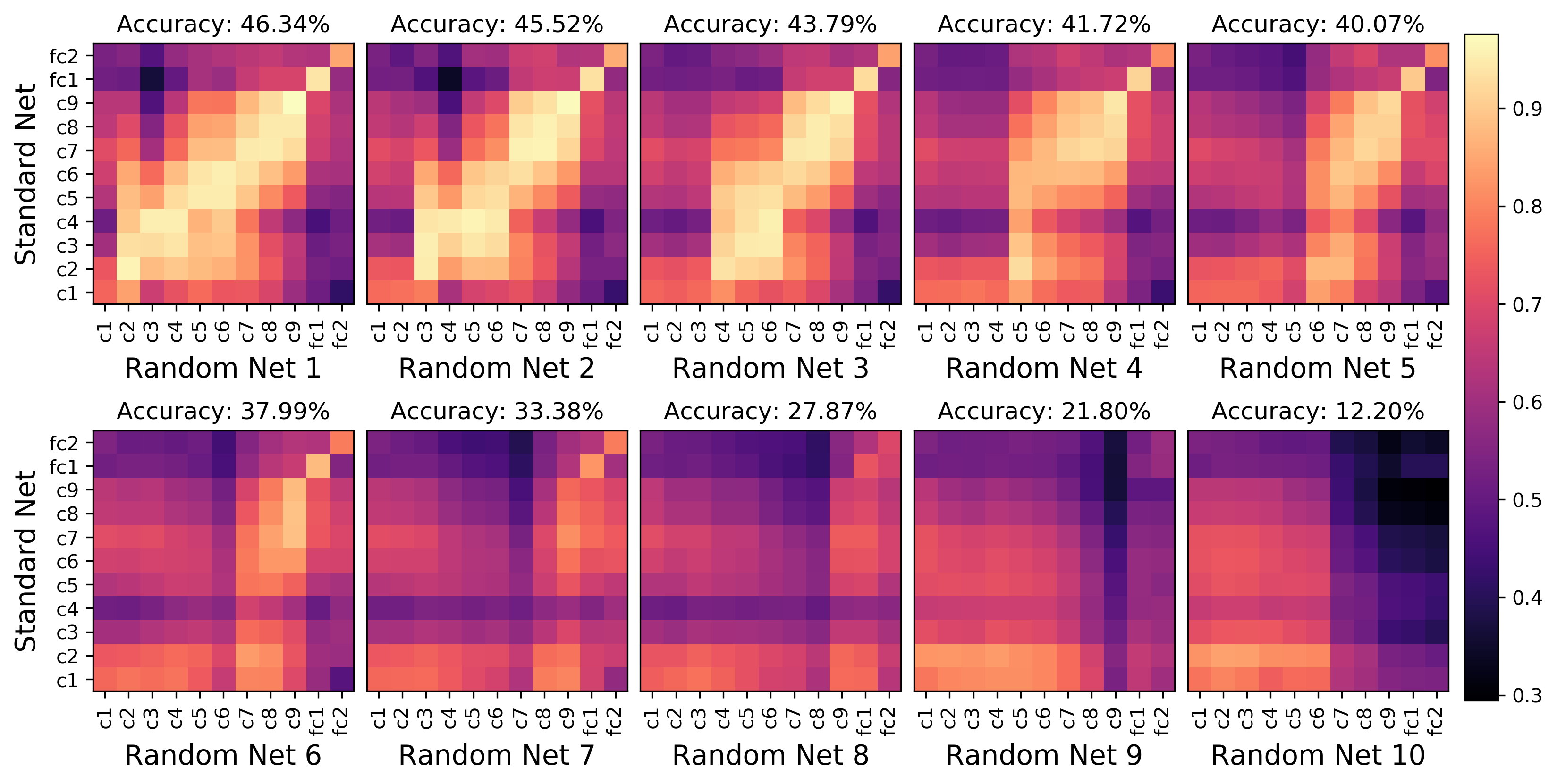

I’ll present a poster at the NeurIPS 2018 workshop on Interpretability and Robustness in Audio, Speech and Language. Check out our short paper “How transferable are features in convolutional neural network acoustic models across languages?”.

Tomorrow I will be presenting a poster at the Cognitive Computational Neuroscience (CCN) conference entitled “Towards a theory of explanation for biological and artificial intelligence”. Here is the poster along with the short paper that goes with it, as well as some slides that I used in a previous presentation.

Part I: Integration of deep learning and neuroscience

Custom workstation build

20 Mar 2017

Last weekend I built a computer. This machine was designed as a workstation for my research, considering the resources needed for the analysis and modelling of large 7T fMRI data and the training/testing of deep neural networks. I used PCPartPicker to help me test out different configurations and check for compatibility issues. Here is the parts list:

How many permutations should you perform?

10 Aug 2016

Non-parametric permutation tests, also called randomization tests, are often recommended because they require fewer assumptions about the data. In the recent paper by Eklund et al., non-parametric permutation testing was the only method that consistently gave false positive rates in the expected range.
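To make the question in the title concrete, here is a minimal sketch of a two-sample permutation test on a difference of means, together with the usual binomial approximation for how noisy the estimated p-value is at a given number of permutations. The function names `permutation_test` and `p_value_standard_error` are my own for illustration, and this is not the procedure used by Eklund et al.; it only assumes NumPy and exchangeable group labels.

```python
import numpy as np


def permutation_test(a, b, n_permutations=10_000, seed=0):
    """Two-sided permutation test on the difference of group means.

    Hypothetical helper for illustration; assumes the group labels
    are exchangeable under the null hypothesis.
    """
    rng = np.random.default_rng(seed)
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    observed = a.mean() - b.mean()
    pooled = np.concatenate([a, b])
    n_a = a.size

    count = 0
    for _ in range(n_permutations):
        # Shuffle the pooled values and split them back into two groups.
        shuffled = rng.permutation(pooled)
        diff = shuffled[:n_a].mean() - shuffled[n_a:].mean()
        if abs(diff) >= abs(observed):
            count += 1

    # Add-one correction: the observed labelling counts as one permutation,
    # so the smallest reportable p-value is 1 / (n_permutations + 1).
    p_value = (count + 1) / (n_permutations + 1)
    return observed, p_value


def p_value_standard_error(p, n_permutations):
    """Binomial approximation to the Monte Carlo error of an estimated p-value."""
    return np.sqrt(p * (1 - p) / n_permutations)


if __name__ == "__main__":
    group_a = [10, 11, 12, 13, 14, 15]
    group_b = [0, 1, 2, 3, 4, 5]
    diff, p = permutation_test(group_a, group_b, n_permutations=999)
    print(f"observed difference = {diff:.2f}, p = {p:.4f}")
    print(f"approx. standard error of p = {p_value_standard_error(p, 999):.4f}")
```

Two things follow from the sketch. The number of permutations sets a floor on the p-value, and the standard error shrinks only with the square root of the number of permutations, so p-values near a decision threshold need many more permutations to pin down than p-values far from it.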

Summary: A Practical Guide for Improving Transparency and Reproducibility in Neuroimaging Research

28 Jul 2016

Three pillars of Open Science: data, code, and papers.